consciousness and the sausage factory

Peter Higgs, of Higgs boson [1] fame, recently gave an interview to the Guardian which generated some interesting reflections on modern academia.

Higgs believes no university would employ him in today’s academic system because he would not be considered “productive” enough [and] because of the expectations on academics to collaborate and keep churning out papers. “It’s difficult to imagine how I would ever have enough peace and quiet in the present sort of climate to do what I did in 1964.”

Professor Higgs was at Edinburgh University during the late fifties and early sixties, doing the kind of solitary, single-minded theoretical work that would eventually lead to his proposal of the Higgs mechanism, but which in the intervening time led to little or no published output.

It seems he came perilously close to being forced out of academia, even after he had produced the 1964 paper which introduced the Higgs boson to the world.

Higgs said he became “an embarrassment to the department when they did research assessment exercises”. A message would go around the department saying: “Please give a list of your recent publications.” Higgs said: “I would send back a statement: ‘None’.”

Higgs believes he would almost certainly have been sacked had he not been nominated for the Nobel Prize in 1980. After that, Edinburgh apparently took the view that it was just about worth retaining him as he might get it – and that if he didn’t, “we can always get rid of him”.

Professor Higgs seems not to have a very high opinion of the new academic culture.

“After I retired it was quite a long time before I went back to my department. I thought I was well out of it. It wasn’t my way of doing things any more. Today I wouldn’t get an academic job. It’s as simple as that. I don’t think I would be regarded as productive enough.”

Some of the online comments on the Guardian article make additional points. There is recognition of the problem of getting work published if it departs too radically from the prevailing consensus, or if it arouses the resentment of a key researcher in the field, especially if that researcher also happens to be the reviewer. On the other hand, one commenter argues that the real problem with publishing papers is that it is too easy.

The real problem is how easy it is to get papers published: it does have to be slightly novel, reasonably written, well referenced, and relevant – but these standards are lax, and applied quasi-randomly by the volunteer brigade of anonymous reviewers.

I suspect both complaints have substance. If you know how to play the game, and crank the handle, it probably is quite easy to get published. The average standard applied by reviewers is fairly mediocre. If you have to review work that is high on technical style but relatively low on substance – as much current work is – that is probably unavoidable. Reviewers do not have time to plough through detail when the logic used is abstruse, or hidden behind jargon or exotic maths.

On the other hand, if you don’t play by the rules, your chances of publication drop considerably, and the fact that you’re making what may turn out to be a major contribution is unlikely to compensate for that.

The same commenter seems to believe that the winners in the publication race are those who are particularly keen to get on.

[The publications process] is mainly useful for identifying highly ambitious researchers who have no qualms in submitting as many papers as they can churn out.

I don’t think that’s so great myself. However, I’d agree that the ambitious types will get a whole lot more research done. The quality, however, is another matter [...]

In short: don’t blame those ambitious types: that’s life.

The current process may well favour certain types who are more ambitious or competitive (in some sense) than their rivals. But there are different kinds of ambition or competitiveness. A welfare state, for example, creates its own kind of Darwinist system: those who are cleverest and most determined at exploiting the system will tend to do best out of it. I suppose one could argue that this is a potential strength of having a complex welfare system. Qualities of cunning and resourcefulness are encouraged, and these qualities may later prove useful to society.

However, if you replace one kind of competitive drive (a desire to build a successful business; a determination to discover something useful) with another (a desire to exploit loopholes; a drive to achieve maximum insertions into journals), there is likely to be a shift in the precise characteristics that you are promoting.

A popular approach, whenever the condition of academia (or any other area of culture) is bemoaned, is to put the blame on materialism, as in the case of this comment:

Somewhere, we decided to value quantity over quality. In politics and economics, this equates to championing GDP and economic growth over quality of life. Unfortunately this line of thought has seeped into academia [...] people in charge of funding began to interpret a thick stack of published papers as having more potential value to the economy [...]

I would name the Thatcher/Reagan era as the time in which this neoliberal viewpoint became dominant, at least in the West.

The commenter, in common with many academics, seems to be confusing two things:

(i) society demanding accountability, proof of value for money, etc, and the use of crude metrics to determine these;

(ii) the pursuit of monetary profit, in contrast to supposedly more worthy (but nebulous) aims such as benefiting stakeholders.

Perhaps under the influence of egalitarian ideology, which condemns (ii) but has sympathy with (i), people who think of themselves as liberal tend to avoid noticing that so-called democratisation can have destructive effects on culture, and to conflate issues about measurement with issues about money. This of course makes it easier to blame that favourite scapegoat, capitalism.

Here is a comment which expresses the viewpoint that it’s unreasonable for academics to expect to avoid having to ‘engage’ with society.

Nobody is saying academics can’t continue to work on whatever they like, but why should society pay for them to play in sandpits without any responsibility except printing papers 80% of which are never read.

There are people struggling to make a living and look after their children and people who are struggling to live without pain and with dignity. There’s an ageing population that need help. Please show some empathy and compassion.

One commenter agrees with Higgs’s criticisms of the new academia, based on his own experience.

I had a university career from 1966 to 1994 and witnessed the takeover by people I call “academic bean counters,” aided in later years by computer monitoring. They have no deep understanding of the research they are assessing. Another name for the process is “Publish or die.”

The justification I always heard was ‘accountability’: we academics have to demonstrate to the taxpayers who fund us and to their politicians that we earn our money.

It’s a tough argument to counter [...]

Another commenter accepts the need for Higgs types to find room in academia, but argues that not everyone can be like that.

the university system as a whole can’t support this model on a large scale, and shouldn’t aim to. There are a couple of examples where it pays huge dividends [...] but it’s an extremely inefficient way to produce good research in general.

There are many academics who see themselves as capable of such individualistic successes, and might expend great effort in isolated pursuit of this, but very few who actually are capable of it.

Pointing out that only a tiny proportion of scientists are capable of what this commenter calls “individualistic successes” seems to raise the question: what is the point of all the others? Certainly, there is an argument for having a few scientists working on expanding the scope and application of the model that’s dominant at a particular time. But tens of thousands of them?

Do we really, as is sometimes argued, inhabit a world so different in terms of our position on the ignorance-knowledge spectrum from that of a century ago that the optimal path to further progress now necessarily involves hundreds of mega-teams, and few if any individual innovators? Or is the difference between then and now more a consequence of the change in our view of society and the change in our politics?

I have no wish to denigrate Peter Higgs’s contribution. The Higgs field was a crucial element in what has become the centrepiece of modern theoretical physics: the Standard Model. It needed a stroke of brilliance to come up with what is now referred to as the Higgs mechanism. Nevertheless, it is probably best regarded as an addition rather than a revolution. It’s more de Broglie wave than Einstein tensor.

So if the argument about lack of room for people to make major intellectual leaps applies to the Higgs field, how much more likely is it to apply to anything really big – an overhaul of the theory of evolution, say? If there were someone capable of a Darwinian revolution, would they find any doors open to them? Or have we reached the age where science must invariably mean the image of the humble team member plugging away with his stained slides, cloud chamber plates or supercomputer readouts, making his tiny contribution to the matrix?

Let us take an example, from another subject. Consider, for purposes of illustration, the mind-body problem; more specifically, the problem of consciousness. Let’s assume there could be a revolutionary new way of thinking about this time-honoured issue, and that all it would take is the right person working under the right conditions for us to finally make a breakthrough – after centuries of interesting, but ultimately unprogressive, musings and meanderings.

Does the present system allow for such a thing to happen?

Let us digress briefly to consider what is meant by “the problem of consciousness”.

Humans believe that they have, by now, an excellent understanding of the physical world. Admittedly there are gaps (how did life begin? why is the electron-proton mass ratio 1:1836?) but it’s generally assumed these are soluble in terms of existing concepts.

The more we seem to understand our physical environment and to be able to manipulate it, however, the more it throws into relief that there is something (“experience”, “mind”, “consciousness”) which seems to be part of reality, related to individual humans and possibly animals too, but for which there seems to be no room in the prevailing physical model.

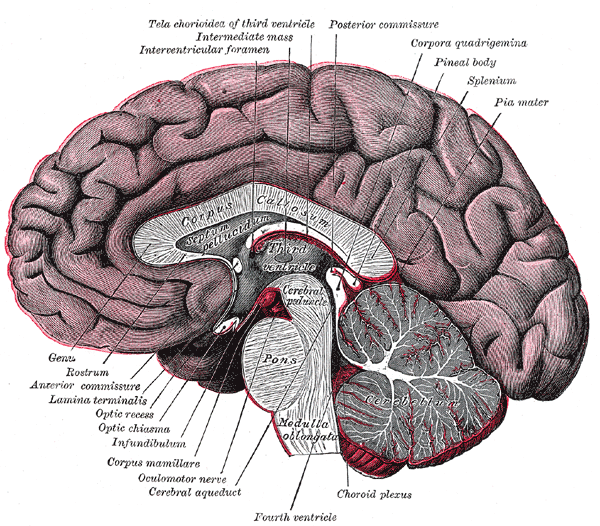

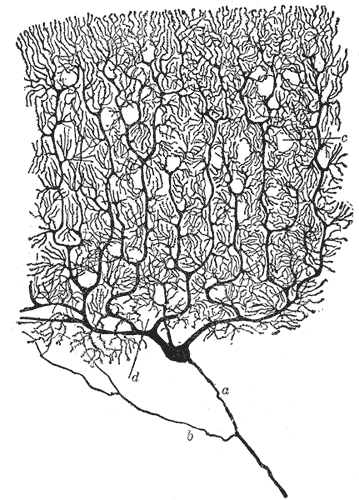

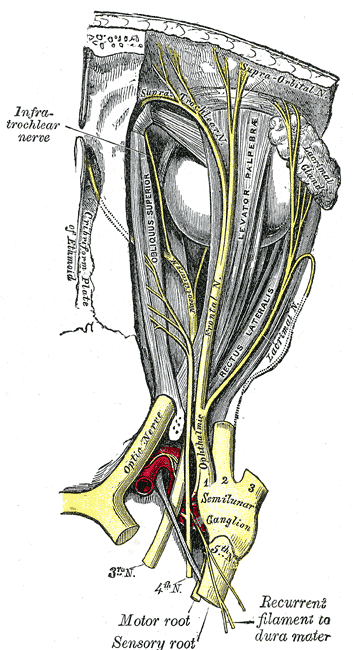

Whole books are nowadays written about the topic of consciousness. Some raise the question of whether all animals are conscious, or only some. Others speculate whether a sufficiently sophisticated robot with perceptual apparatus and transistorised cognition could be conscious, and at what level of sophistication this would occur. Numerous theories have been proposed about how consciousness might arise from neuronal activity. Various ideas have been suggested as to what the function of consciousness might be, if it is not a mere epiphenomenon.

In spite of all this speculation there is a sense in which the basic problem – of how an intrinsically non-physical thing appears to arise out of physical matter – has not been advanced since the time of Descartes.

Whatever consciousness enthusiasts may like to think, the topic is fraught with difficulties. Even if twentieth-century linguistic philosophers were wrong to assert that nothing meaningful could be referred to by a term so vague, there remains the conundrum of how the meaning of the word is supposed to be learnt [2]. Before one can enquire about the relation of ‘consciousness’ to our current model of the physical world, one needs to be clear about what the word means, and how the meaning is established. And the answer one gets if one thoroughly explores these fundamentals may be negative. The word may not in fact refer to anything clearly enough to be able to ask questions about it, let alone give answers.

The fundamentals need to be explored by someone with the ability and desire to think about something extremely abstract in a non-common-sense way. As a problem, this one is as challenging as they come, and possibly some way ahead, in terms of difficulty, of the insight that there may be no such thing as absolute simultaneity.

Humans thinking about consciousness is a little like a mirror trying to think about what it means to reflect. This doesn’t necessarily imply, as some philosophers have argued, that we are hard-wired to find it an impossible topic to think about (though that is not to be ruled out), but it is likely to be beyond the capacity of most academics.

Cartoonists picture Einstein calmly contemplating the stars as he puffs on his pipe [...] Apparently [this image] is what Einstein wanted, because he did his best to hide his turbulent nature.

Once, however, an interviewer caught him off guard. Then he admitted that his urge to understand the universe kept him in a state of “psychic tension ... visited by all sorts of nervous conflicts ... I used to go away for weeks in a state of confusion ...” [3]

What sort of qualities would it take to get anywhere with the mind-body problem when so many others have tried and failed? I think four things are likely to be necessary.

Many people would put intellectual ability as the first – and perhaps only – quality required. One’s IQ probably needs to be higher than that of the average current academic. (That, of course, is not saying a lot.) On the other hand, I am not sure it needs to be as high as that of, say, the average applied mathematician working in rocket-science finance.

Incidentally, an exceptional innovator can easily appear less than brilliant, at least in certain contexts. He or she may appear to be slow on the uptake, hopelessly impractical, or useless at flirting (for example). This may reflect specialisation of brain function, in some cases chosen; or just lack of interest.

It seems to me three other qualities are likely to be at least as important as intelligence.

● Having the necessary drive and tenacity. Like Peter Higgs, a person who sets out to solve a problem needs to be stubborn about pursuing it until they think they’ve cracked it.

● You have to be willing to sacrifice one mode of thought for another. Ordinary life may not contain many obvious intellectual challenges, but it can demand a great deal of brain power, particularly when you are trying to interact purposefully with other people. (It has been suggested that the human brain expanded in order to keep up with the complexities of living in a group.) If you devote the maximum possible brain resources to tackling an abstract scientific problem, you may well have less than you need for normal day-to-day things, and this may make you appear somewhat ridiculous in a social setting. That is something you would have to tolerate if you were to devote yourself to a particular topic. (This, rather than some form of innate autism, may be what lies behind the speculation that people like Newton and Einstein suffered from Asperger syndrome.)

● You have to really want to solve the problem, and that means your emotions have to be involved. It’s not that you can’t have any other interests in life, but you probably have to want it more than you want a relationship, or peer approval, or a family, or an interesting social life. In other words, you have to be (and be prepared to be seen to be) obsessional, a quality that is unlikely to gain you many admirers.

Motivation is underestimated as a factor in many areas of achievement, particularly perhaps in the intellectual arena. Sometimes it is ignored as a factor altogether. Or, if it is acknowledged, it’s often in a negative sense. Many egalitarianism-inspired analyses are based on the idea that if differences of outcome derive from some people wanting things more than others, these are less ‘fair’ than differences deriving from innate characteristics other than desire. That is, if innate characteristics are allowed for at all.

What about the issue of training and experience? Many people would claim that one can only solve a specialist problem of this kind by having a thorough grounding in the relevant technical areas – brain physiology, information theory, philosophy of mind. One occasionally gets lucky amateurs, it is said; but perhaps not these days, at least not in scientific topics that are already highly advanced and where research typically requires expensive equipment and elaborate techniques?

If I were personally allocating money to solving a specific problem, and I thought that the four requirements above had been met – and that the candidate was of reasonably sound mind and body – I wouldn’t worry about training. True, it might be a good idea to find out everything there is to know about the topic, including the latest cutting-edge research. On the other hand, I would trust the candidate to know how best to approach the problem; what to focus on, and what to ignore. Whatever is worth knowing about could, by someone sufficiently clever and motivated, be picked up surprisingly quickly. If empirical work is required – to collect data or test hypotheses – one could always hire a research team.

What about the various scientific disciplines beavering away at the problem of brain functioning? Aren’t they making good progress all the time, and isn’t it likely that before long they will – with the kind of people they have at the moment – achieve the final link, i.e. between (a) the top-level cerebral activity which binds all the input, suitably digested and schematised, into a stream of information from which the organism’s decisions can be made (still purely a physical process, if somewhat abstract) and (b) ‘consciousness’ itself?

I don’t deny that understanding of the brain is continuously advancing, though somewhat slowly. In some ways the progress has been disappointing. Forty years ago, one could have hoped that by now we would be a lot further on with (for example) the representation problem: how the brain recognises perceptual ensembles as things, and how it assigns meaning. Still, given another hundred years or so, we should be a lot closer to solving these issues, even at the present rate. (Though I wonder whether even that will require one or two revolutionary leaps, perhaps in computer science rather than in physiology. It seems likely that the brain uses a kind of top-level processing that is different from anything currently found in computers.)

But even if we ‘solve’ the architecture of the brain, we will be left (apparently) with the raw consciousness problem. What is the relationship between the model of the world which the brain generates ‘for’ the conscious decision-maker, and the phenomenological experience of consciousness per se?

A patron hoping to promote a solution to the consciousness problem may look to established neurophysiologists and psychologists with years of experimental work and publications under their belt, some of whom must have been thinking about this issue, however allusively, for decades. Mightn’t one of them come up with the required revolution? Well, they always might. Indeed, some of them have written books in which they sound as if they think they have.

The likely problem with this is that an intellectual revolution requires someone without too many preconceptions, commitments or vested interests. Someone who hasn’t spent years accepting and redistributing the received wisdom. It may turn out that some key assumption is precisely what is blocking clarity, and yet is also the one thing on which the various rival theorists currently agree.

The suggestion that

(a) membership of, and identification with, the establishment can be a hindrance to generating innovation

should not of course be confused with the notion that

(b) it is beneficial for the productivity of innovators that they be forced to operate under restricted conditions.

Culture requires leisure; and cultural production requires, in many cases, a level of leisure so high that it is only possible with institutional support, or with private capital – preferably both.

Part 2 of this article will look at the relationship of potential innovators to the university system, and the likely conditions that are required for ‘revolutionary’ progress.

The sciences (like the arts) require patronage, which implies exercising individual judgment and backing that judgment. Using private funding simply to reinforce pre-existing social success generates kudos for funders, but is unlikely to provide what is missing from today’s research world.

Technology billionaires had to avoid groupthink when they started out. There is surely no need for them to abandon critical faculties outside their comfort zone.

One of the powers of wealth is that one is not obliged to seek majority approval. It’s a power that should be used to good purpose rather than wasted – more than ever in today’s world of collectivism and conformity.

1. Although the Higgs boson, and its discovery, are what has received public attention, the more important concept proposed by Higgs (also in the 1960s) was the Higgs field – a field permeating all of space which allows for a certain kind of symmetry breaking and which explains why some particles have mass. Detection of the Higgs boson provides empirical support for the Higgs field.

2. Consider a group of evolving social robots. We might expect these to acquire (eventually) the ability to communicate to one another information about their environment, and also about their internal states (e.g. disease or other malfunctioning). With time, they are likely to learn to distinguish between internal states that map to genuine external objects (‘perception’) and spurious versions of such states caused by glitches in the machinery (‘hallucinations’).

From there we would expect them to refine their language to include terms that refer simply to internal would-be perceptual states per se, leaving moot the question of whether these correspond to external reality in a particular case. Thus, they might come to talk of “sense data”.

“Greetings, Robot X527. My perceptual processing sensors appear to be registering an apple tree up ahead. However, I ingested some dodgy water last night, so it may just be my circuits playing up.”

This doesn’t, however, seem to get us to what we normally mean by “consciousness”. Nor does it amount to the robots describing their internal states qua internal states, or forming the concept of qualia.

Now assume that such robots do become ‘conscious’. It is nevertheless difficult to see how they would learn to use a word to refer to it. This raises the question, how do we learn to use the word? How do we ever have sufficient shared awareness of what is meant by it (supposedly, something over and above the behavioural signs it correlates with) to agree on the meaning?

3. Denis Brian, Einstein, John Wiley, 1996, p.60.